Abstract

People who are homeless, or who have only recently escaped homelessness, are at risk of malnutrition due to several factors, one of which is limited knowledge of cooking. Cooking recipe recommendation systems are one technology-based approach to giving such populations information that can help them make more informed nutrition choices. With the increasing capabilities of Artificial Intelligence (AI) technologies, there are now also AI-based cooking recipe recommendation systems. However, recipe recommendation systems do not guide a person with limited cooking experience through the cooking itself. In particular, persons with cognitive disabilities or other mental health disorders can get sidetracked during cooking and forget to return to the cooking activity. This work describes a system that acts as a smart cooking assistant. The main idea is for the system to observe the user performing the steps of a recipe and to provide automatic reminders on when to move to the next step. The system consists of both hardware and machine learning-based software components. The hardware consists of a camera, an infrared thermal camera, and a temperature sensor, integrated around a Raspberry Pi mini-computer. This suite of sensors is mounted over a stovetop and constantly monitors the cooking area, specifically the area where a cooking pot sits on the stovetop. Convolutional Neural Network-based image processing algorithms analyze the sequence of images from the cameras to identify which stage of cooking the user is currently in.

Image Processing

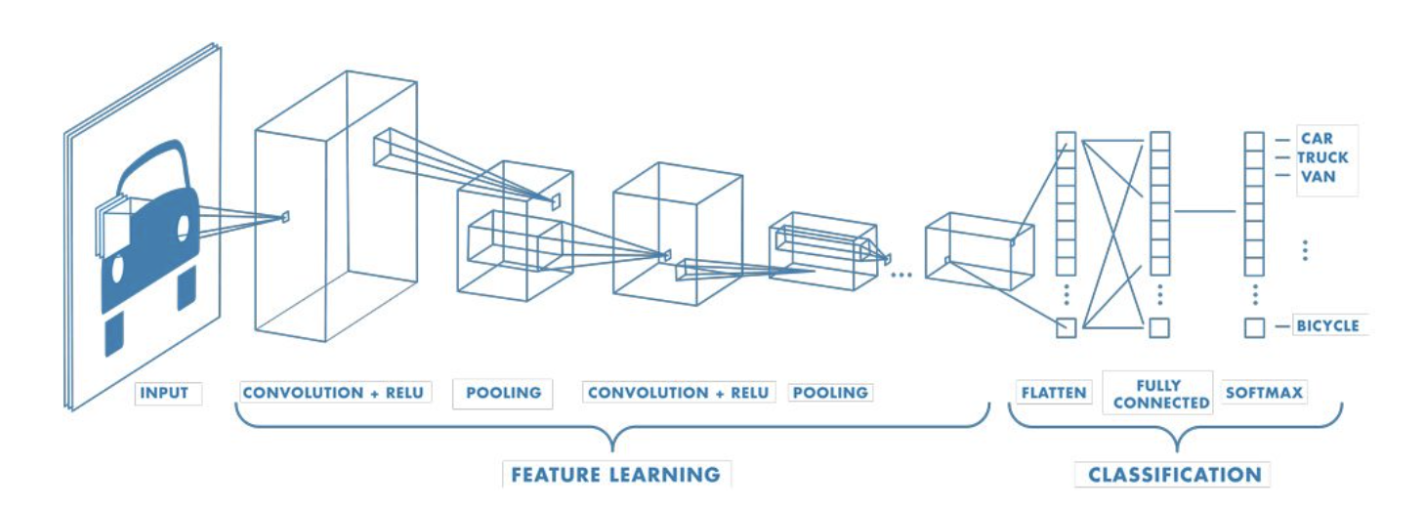

We treat cooking stage identification as an image classification problem and address it with a pre-trained object detection model, “SSD MobileNet V2 FPNLite 320x320”, which is one of the models provided in the TensorFlow 2 Detection Model Zoo of the TensorFlow Object Detection API [13]. This model is a Single Shot Detector (SSD) with a MobileNet V2 backbone and a feature pyramid network as feature extractor, pre-trained on the COCO dataset. MobileNet is the base network that provides high-level features for classification or detection, while the detection layers employ the SSD method: a feed-forward convolutional network that generates a predetermined set of bounding boxes together with scores indicating the presence of object class instances within those boxes. Because detection happens in a single forward pass, SSD is faster than R-CNN and other models. The model is also lightweight and can run efficiently on mobile and embedded devices. MobileNet V2 uses depthwise separable convolutions and residual connections to reduce the number of parameters and improve the performance of the network.

For this project we are testing the model on a simple recipe: cooking pasta. We divided the cooking steps for this recipe into 6 stages that can be identified by the objects on the stovetop: “Empty burner”, “Empty pot”, “Pot with water”, “Pot with boiling water”, “Pot with pasta”, and “Pot with cooked pasta”.
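The parameter saving that depthwise separable convolutions provide can be illustrated with a short calculation. The sketch below compares the weight count of a standard convolution with that of a depthwise convolution followed by a 1x1 pointwise convolution. The kernel size and channel counts are illustrative assumptions and are not taken from the actual MobileNet V2 configuration; biases are ignored.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a standard k x k convolution (bias omitted)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """Weights in a depthwise k x k conv followed by a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


if __name__ == "__main__":
    # Illustrative layer sizes (assumed, not from the paper).
    k, c_in, c_out = 3, 32, 64
    std = standard_conv_params(k, c_in, c_out)
    sep = depthwise_separable_params(k, c_in, c_out)
    print(f"standard: {std}, separable: {sep}, ratio: {std / sep:.2f}")
```

For these assumed sizes the separable layer needs 2,336 weights instead of 18,432, roughly an eightfold reduction, which is the kind of saving that makes the network practical on a device such as the Raspberry Pi.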

Layout of the SSD MobileNet architecture.

Image Collection

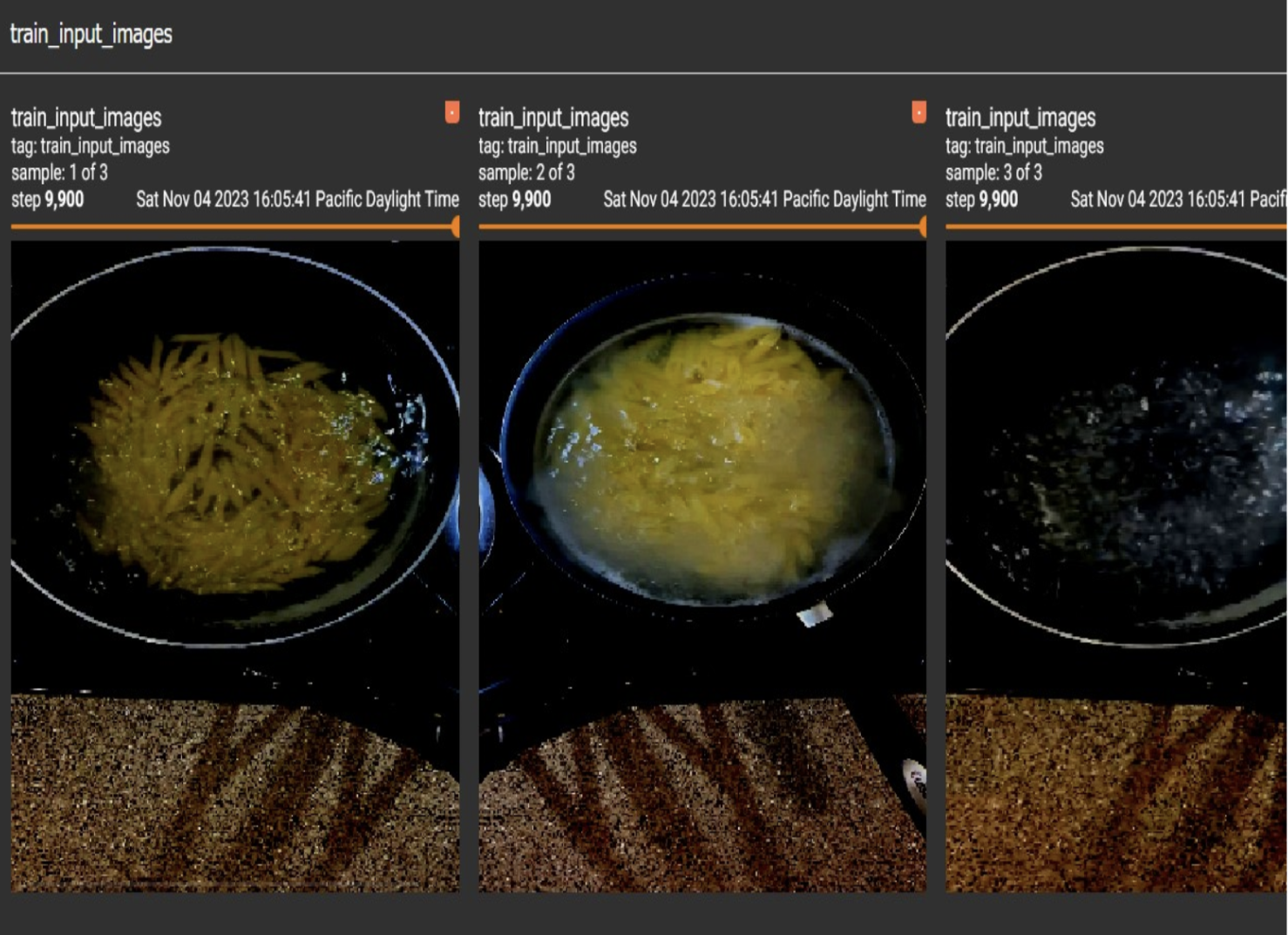

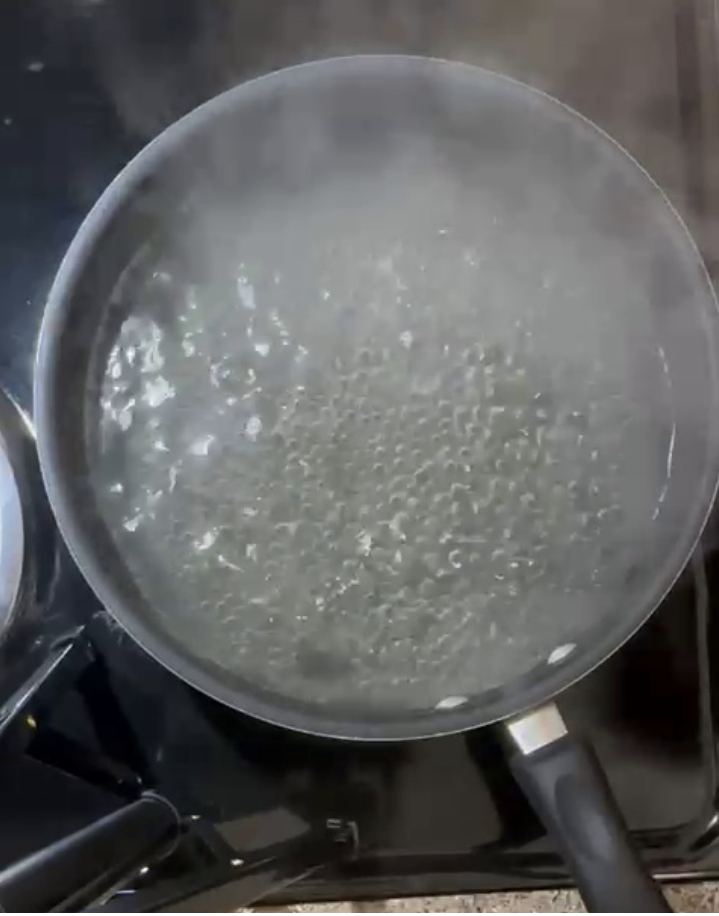

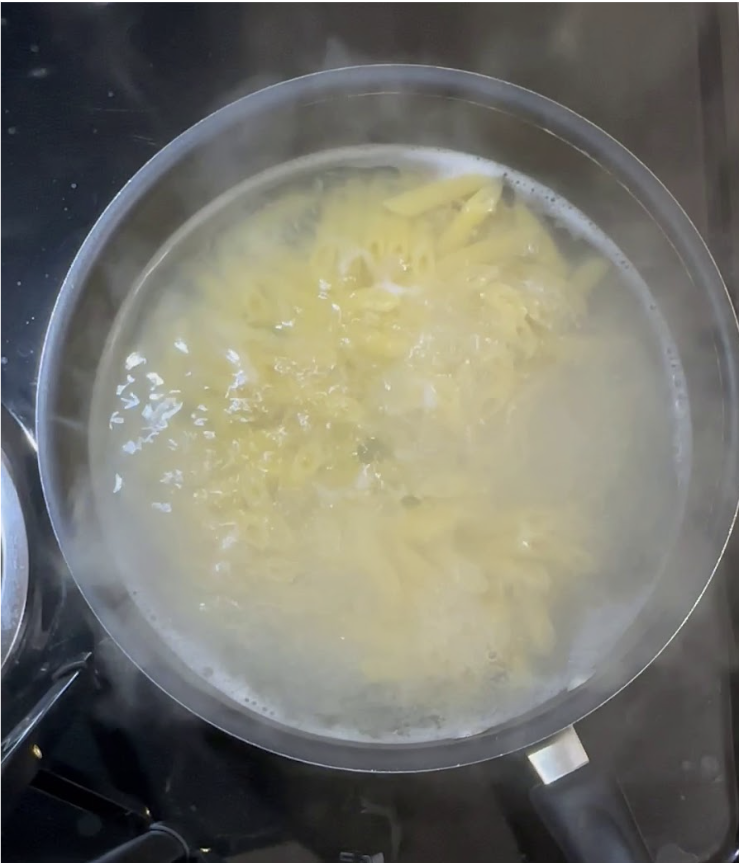

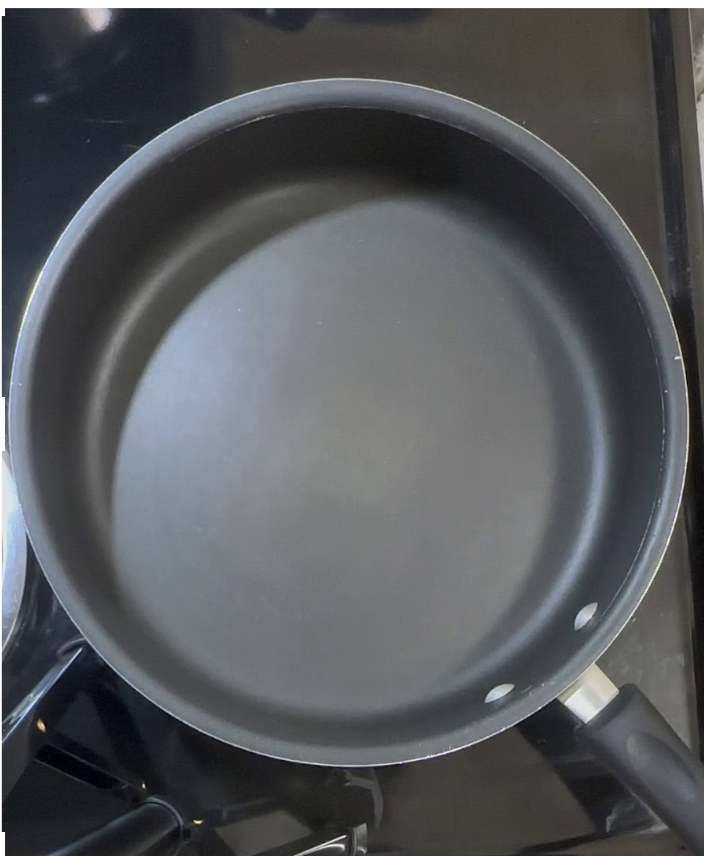

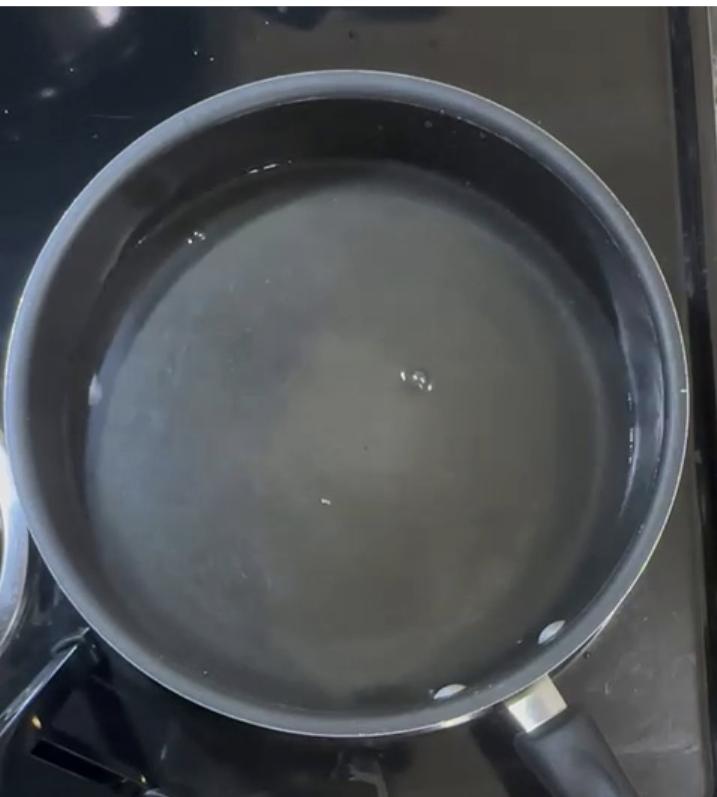

Collecting and labeling images for the dataset is non-trivial, and the labels must be chosen with the future scalability of the model in mind. For the present study we aimed to detect several stages of cooking pasta and labeled the collected images accordingly. Since our study focuses on creating an object detection model for supportive housing, the images were collected from a video recording that shows how to cook pasta, with the frames extracted using a Python script. Parameters such as the orientation of the camera and the type of pans used were kept identical to those in the supportive housing to better emulate the target conditions. The dataset consists of 300 images, categorized by the stage of cooking pasta that the camera sees at each instant, namely “Empty burner”, “Empty pot”, “Pot with water”, “Pot with boiling water”, “Pot with pasta”, and “Pot with cooked pasta”. Sample images from these classes are shown in Fig. 5.
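The frame extraction step could look roughly like the following. This is a minimal sketch and not the script used in the study: it assumes OpenCV (`opencv-python`) is installed, samples frames evenly across the recording, and uses hypothetical names (`select_frame_indices`, `extract_frames`, the `frame_XXXXXX.jpg` naming scheme). The source does not state how frames were actually sampled.

```python
from pathlib import Path


def select_frame_indices(total_frames, n_samples):
    """Return up to n_samples evenly spaced frame indices in [0, total_frames)."""
    if total_frames <= 0 or n_samples <= 0:
        return []
    n = min(n_samples, total_frames)
    step = total_frames / n
    return [int(i * step) for i in range(n)]


def extract_frames(video_path, out_dir, n_samples):
    """Save evenly spaced frames from video_path as JPEG files in out_dir."""
    import cv2  # imported lazily so the index helper has no OpenCV dependency

    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    cap = cv2.VideoCapture(str(video_path))
    total = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
    saved = []
    for idx in select_frame_indices(total, n_samples):
        cap.set(cv2.CAP_PROP_POS_FRAMES, idx)  # seek to the chosen frame
        ok, frame = cap.read()
        if not ok:
            continue  # skip frames that cannot be decoded
        target = out / f"frame_{idx:06d}.jpg"
        cv2.imwrite(str(target), frame)
        saved.append(target)
    cap.release()
    return saved
```

A call such as `extract_frames("pasta.mp4", "dataset/images", 300)` (both paths hypothetical) would yield at most 300 images. Even sampling is only one option; stages that pass quickly, such as the moment pasta is added, may need denser sampling to be well represented.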

Sample collected images for “Empty burner”, “Pot with boiling water”, “Pot with cooked pasta”, “Empty pot”, “Pot with pasta”, and “Pot with water”, respectively.

Labeling Images

The collected images are then annotated using the “LabelImg” package, which allows us to manually draw a bounding box around the region of the image we want to label and assign that region its appropriate label. This step is illustrated in Fig. 6. The process produces an XML annotation file for each labeled image. The dataset is then split into training and test sets, with each image kept together with its annotation file.
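As an illustration of what the annotation files contain and how a split might be done, the sketch below parses a Pascal VOC style XML annotation (the format LabelImg writes by default) and splits a list of items into training and test subsets. The sample XML, the file names, the 80/20 ratio, and the random seed are assumptions made for this example; the source does not state the split ratio that was used.

```python
import random
import xml.etree.ElementTree as ET


def parse_voc_annotation(xml_text):
    """Return (filename, [(label, xmin, ymin, xmax, ymax), ...]) from VOC XML."""
    root = ET.fromstring(xml_text)
    filename = root.findtext("filename")
    boxes = []
    for obj in root.findall("object"):
        bb = obj.find("bndbox")
        boxes.append((
            obj.findtext("name"),
            int(bb.findtext("xmin")), int(bb.findtext("ymin")),
            int(bb.findtext("xmax")), int(bb.findtext("ymax")),
        ))
    return filename, boxes


def split_train_test(items, test_fraction=0.2, seed=0):
    """Shuffle items reproducibly and return (train, test) lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


# Hypothetical annotation for one frame; the values are made up for illustration.
SAMPLE_XML = """<annotation>
  <filename>frame_000120.jpg</filename>
  <size><width>320</width><height>320</height><depth>3</depth></size>
  <object>
    <name>Pot with water</name>
    <bndbox><xmin>40</xmin><ymin>55</ymin><xmax>260</xmax><ymax>290</ymax></bndbox>
  </object>
</annotation>"""

if __name__ == "__main__":
    print(parse_voc_annotation(SAMPLE_XML))
    train, test = split_train_test([f"img_{i}.jpg" for i in range(300)])
    print(len(train), len(test))
```

Splitting on image names and looking up each image's XML file afterwards keeps every image paired with its annotation. Because consecutive video frames are highly similar, a purely random split can place near-duplicate frames in both subsets, which is worth keeping in mind when interpreting test accuracy.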

Sample image depicting labeling using the “LabelImg” package.